Responsible AI is as much about the small stuff as it is about the big stuff

Last week, I had the pleasure of presenting a keynote at the AI/ML Technology Leadership Forum. I discussed Responsible AI and shared practical tips for putting it into practice in your organization.

You can view a video of the presentation here.

💡 Key takeaway

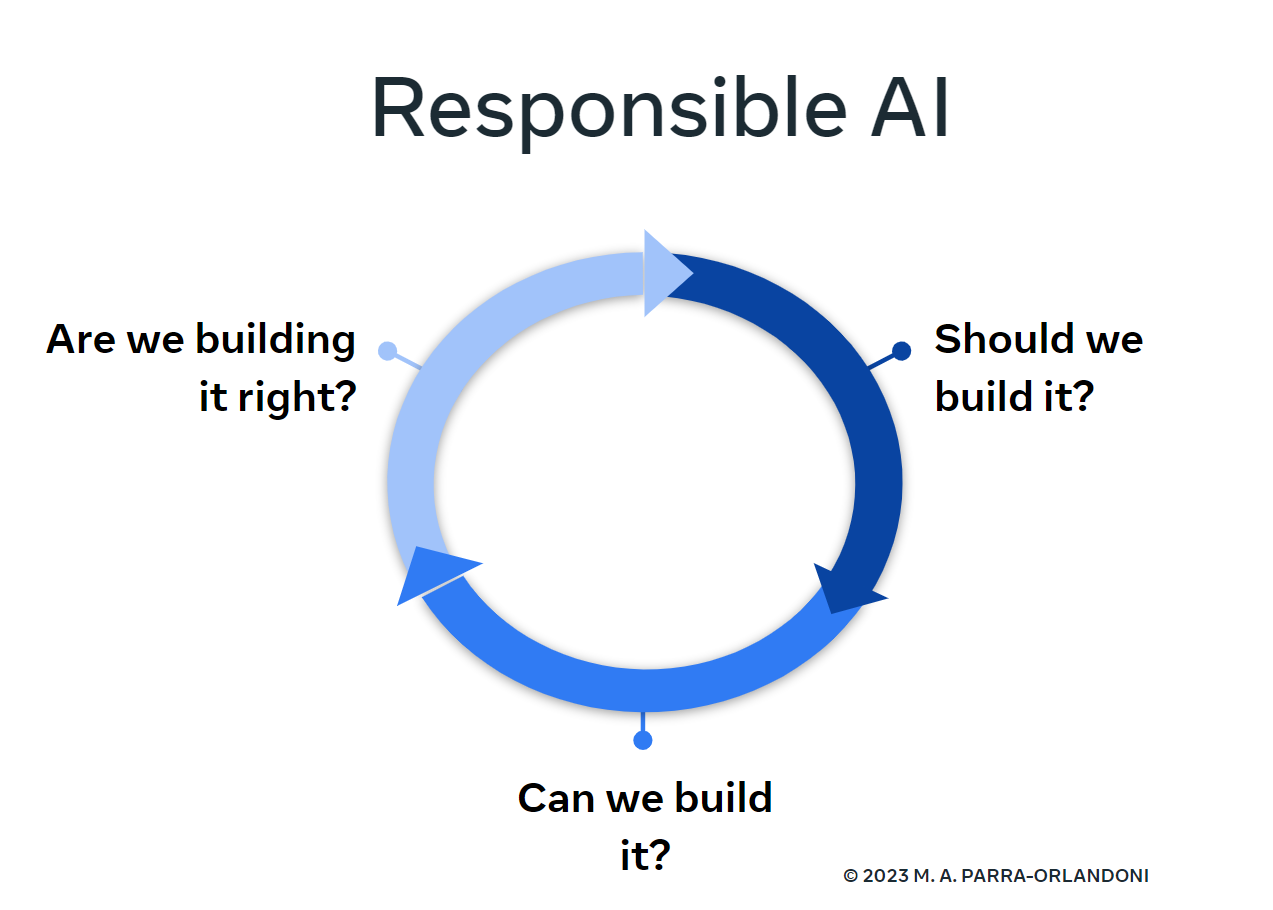

Responsible AI is about day-to-day decisions: Should we build it? Can we build it? Are we building it right? It’s a personal responsibility.

And it's as much about the small stuff as it is about the big stuff.

Below is a summary of some tips I shared during the talk.

*****

💡 Commit to cross-functional collaboration

Responsible AI is one of the most cross-functional topics out there, and this is what makes it super interesting—and super challenging—to implement. What does it take?

✔ Develop internal taxonomies to bridge cross-disciplinary vocabulary gaps.

✔ Ring-fence one or a few projects staffed with tight cross-functional teams. Push these teams beyond coordination and toward integration, and give them room to experiment without being bogged down by past ways of working.

✔ Design incentives for cross-functional work. Unless KPIs, performance reviews, and the like are aligned, collaboration born of curiosity and goodwill won’t last.

💡 Make it all about driving value

Responsible AI is largely about anticipating and mitigating risks. But this framing can fall short because of a cognitive bias called ‘absence blindness’: our difficulty acknowledging what we can’t see. When we successfully mitigate a risk, nothing happens, and absence blindness makes us forget why that mitigation mattered.

To hack absence blindness, reframe risk mitigation in terms of concrete, tangible value propositions. This shows how Responsible AI helps create and protect value rather than destroy it. The outcome is a trusted AI product, and by fostering trust, you create a sustained innovation cycle.

To help think in terms of driving value:

✔ Ground yourself in the purpose of the AI to keep value tradeoffs in perspective.

✔ Consider the full lifecycle of an AI product in your value calculation. In particular, don’t overlook MLOps: the practices for deploying, monitoring, and maintaining models in production.

✔ Look through the eyes of your various stakeholders, since each sees value differently. In the best case, you can align their views; otherwise, you’ll at least be equipped to make deliberate, informed value tradeoffs.

💡 Always be learning

This space moves incredibly fast. If you aren’t actively seeking out what’s new, you’ll miss out on others’ hard-earned lessons. Here’s how to keep learning on a busy schedule:

✔ There are many high-quality Responsible AI voices on LinkedIn, so take advantage and follow them!

✔ If your calendar drives your day, block out 15–30-minute slots for reading articles or for virtual coffee chats with smart colleagues.

✔ Double-task by listening to podcasts while commuting, walking your dog, or washing dishes.